No, ReviewMeta’s not ready for Prime Time – at least for books

ReviewMeta’s an interesting idea: analyze product reviews and try to weed out the bought-and-paid-for ones. Lord knows that’s an issue, and one that makes the review system on sites like Amazon less useful for everyone.

The problem is that data analysis is hard. Facts are facts, but what those facts mean, well, that’s another animal.

David Gaughran took a look and tweeted up a storm about it. Then ReviewMeta responded with a blog post. Fair enough, and a relatively common internet kerfuffle.

The whole thing drew my attention, and I decided to take a look at a few books: the first book in my own series, David Weber’s On Basilisk Station, and Nathan Lowell’s Quarter Share. I know for a fact that my reviews are organic, and I have good reason to believe Weber’s and Lowell’s are too.

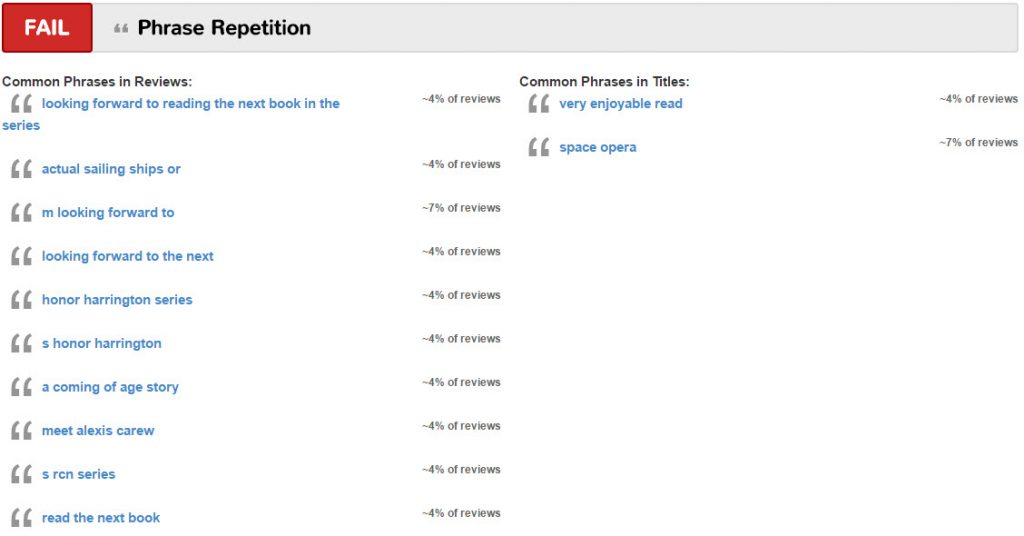

These three titles progressively show what’s wrong with ReviewMeta’s analysis, at least for books. First, they flagged Into the Dark, with a fail for word repetition:

Is there really any surprise that reviewers would repeat these terms when reviewing the Alexis Carew series?

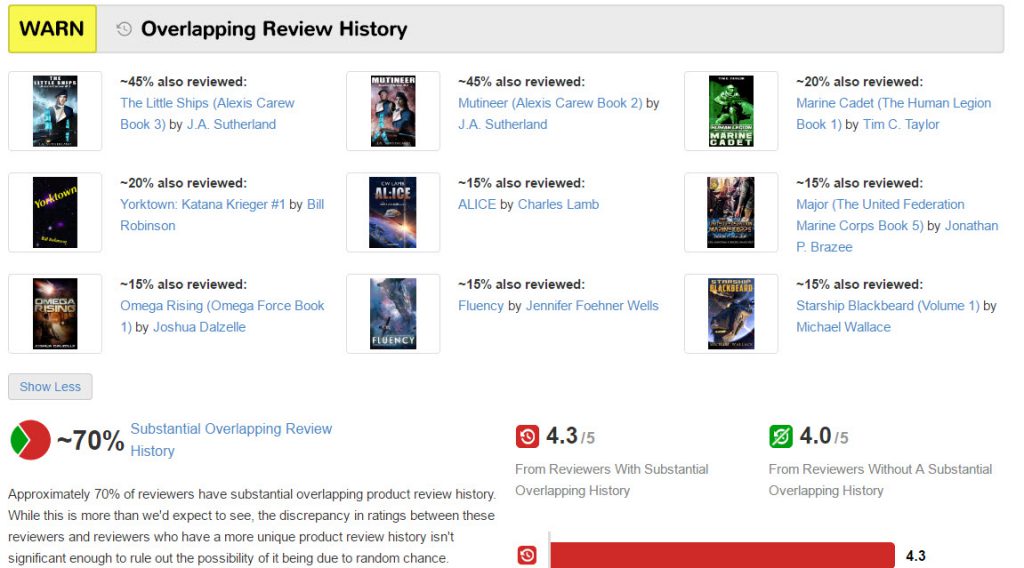

Then there’s the “overlapping review history”:

Curiously, readers of a space opera / military scifi series also reviewed other science fiction. Go figure.

The results are even more troubling for Weber’s and Lowell’s books, especially Lowell’s Solar Clippers series, where ReviewMeta “fails” the title.

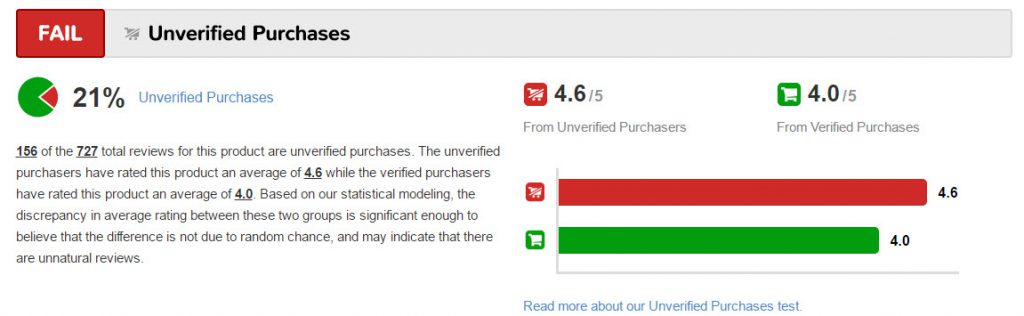

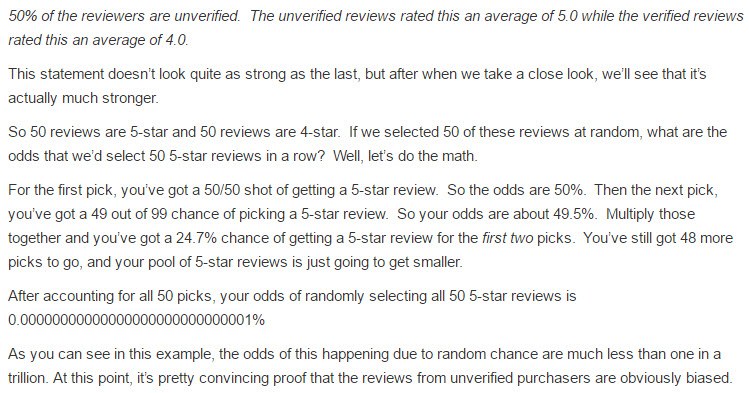

One reason given is that there’s a high ratio of unverified purchases:

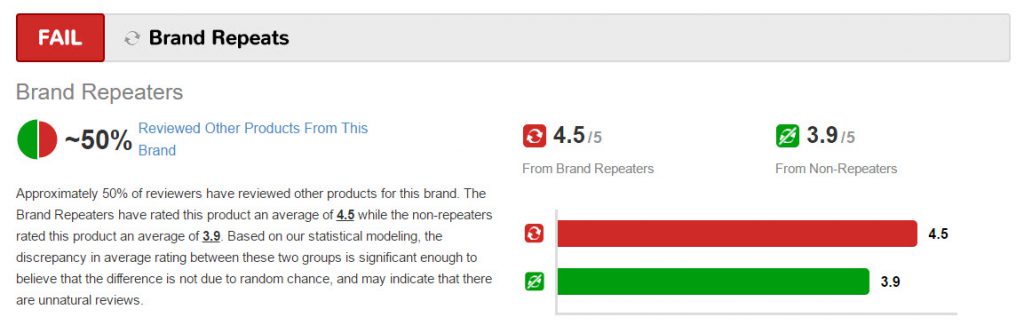

Well, Quarter Share is in Kindle Unlimited, and KU readers don’t get the verified flag when they review. And then there’s that brand thing again, this time with a wide gap in average rating between “brand repeaters” and everyone else:

The Solar Clippers series is pretty polarizing: many people love it and many don’t. So is it any wonder that there’d be a large gap between those who read the rest of the series (repeating the brand) and those who didn’t?

There’s a deeper issue with the analysis itself, which shows up in ReviewMeta’s description of its methodology:

Now, at first blush that makes a lot of sense, but I’m heading to HonorCon next month. I’ll be promoting my book to David Weber fans at a military scifi convention. These are my people; they’re more likely (please God) to like my books. It’s entirely plausible that there’d be a large influx of positive reviews following that convention, compared with a stretch where I don’t attend conventions and readers find the books at random on Amazon or through Facebook marketing.

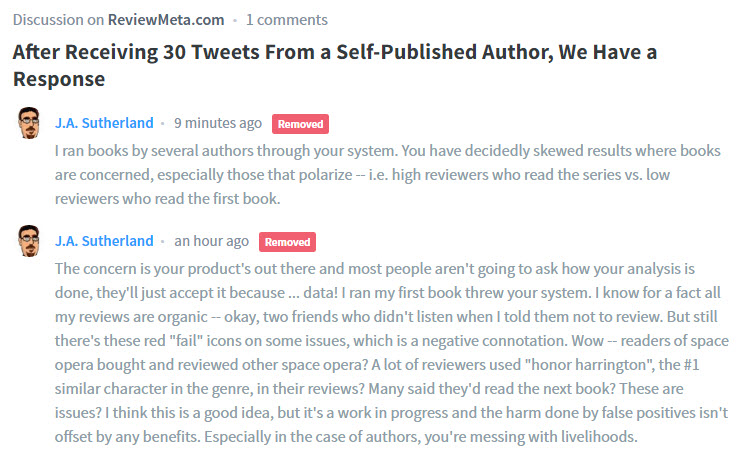

Anyway, I tried to leave a couple of comments on ReviewMeta’s blog post, but they decided they didn’t care for the feedback.

So for authors, ReviewMeta has some serious issues, and we should be concerned if people start using it to analyze our books. For other products it might actually be a useful tool, and book series may simply not be something they took into account in their analysis. But this is a prime example of why you should look at how something’s analyzed and not just trust that the data guys got their stuff right the first time.

Update 9/28/16: This morning I ran the top ten Kindle books through ReviewMeta: 5 fails, 3 warns, 2 passes. Nope, just nope.